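This section introduces the LeNet, AlexNet, VGGNet and GoogLeNet neural networks. The LeNet model is wrapped into a function, VGGNet is given a basic introduction, and the focus is on the role of the 1×1 convolution kernel in GoogLeNet.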

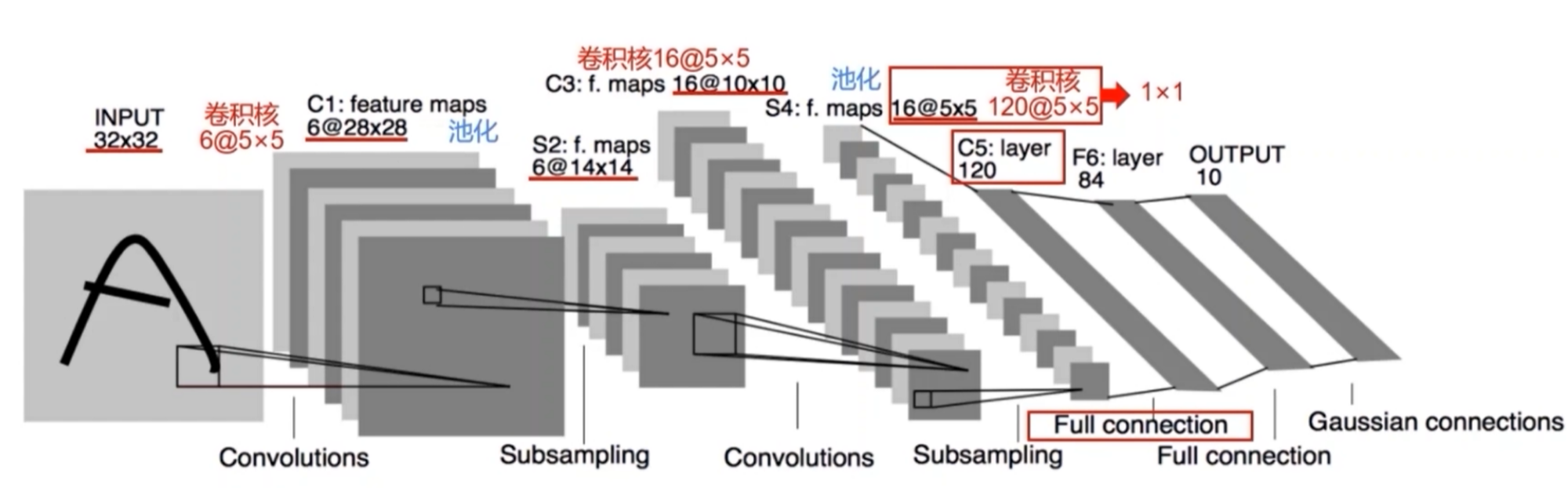

LeNet Neural Network

1. Network Structure

2. Wrapping the LeNet Model in a Function

```python
def LeNet(input_shape, padding):
    ...
```
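The body of `LeNet(input_shape, padding)` is truncated above. Below is a minimal Keras sketch of what it could look like, reconstructed from the model summary printed later in this section. It is not the author's code. The assumptions are that `padding` applies only to the first convolution (so `"same"` keeps a 28×28 input at 28×28), that pooling is 2×2 max pooling, and that the activations are ReLU/softmax.

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def LeNet(input_shape, padding):
    """Sketch of a LeNet-5-style network for 10-class classification.

    Assumption: `padding` is used only by the first Conv2D layer. With
    padding="same" and a 28x28x1 input, the shapes and parameter counts
    match the summary shown in this section.
    """
    model = models.Sequential([
        layers.Input(shape=input_shape),
        # C1: six 5x5 kernels
        layers.Conv2D(6, kernel_size=5, padding=padding, activation="relu"),
        # S2: 2x2 max pooling halves height and width (28 -> 14)
        layers.MaxPooling2D(pool_size=2, strides=2),
        # C3: sixteen 5x5 kernels, no padding (14 -> 10)
        layers.Conv2D(16, kernel_size=5, activation="relu"),
        # S4: 10 -> 5
        layers.MaxPooling2D(pool_size=2, strides=2),
        # C5: 120 kernels of size 5x5 collapse the 5x5 map to 1x1
        layers.Conv2D(120, kernel_size=5, activation="relu"),
        layers.Flatten(),
        # F6 and the 10-way output layer
        layers.Dense(84, activation="relu"),
        layers.Dense(10, activation="softmax"),
    ])
    return model


model = LeNet((28, 28, 1), "same")
model.summary()
```

The layer names in your own summary will differ (`sequential_9`, `conv2d_27`, ...), because Keras numbers layers per session.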

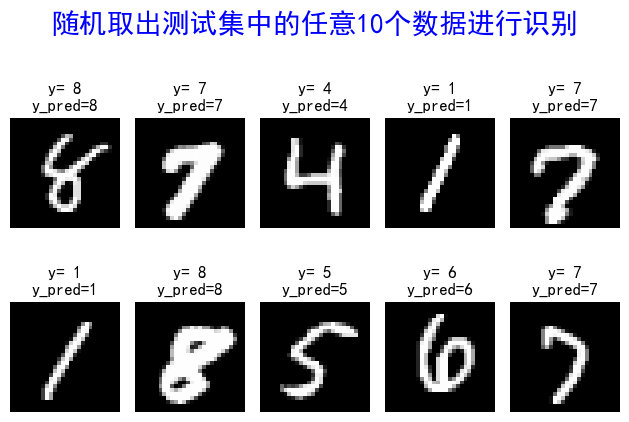

3. Example: Handwritten Digit Recognition with LeNet

```python
# 1. Import libraries
```

The output is as follows:

```
Model: "sequential_9"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_27 (Conv2D)           (None, 28, 28, 6)         156
_________________________________________________________________
max_pooling2d_18 (MaxPooling (None, 14, 14, 6)         0
_________________________________________________________________
conv2d_28 (Conv2D)           (None, 10, 10, 16)        2416
_________________________________________________________________
max_pooling2d_19 (MaxPooling (None, 5, 5, 16)          0
_________________________________________________________________
conv2d_29 (Conv2D)           (None, 1, 1, 120)         48120
_________________________________________________________________
flatten_9 (Flatten)          (None, 120)               0
_________________________________________________________________
dense_18 (Dense)             (None, 84)                10164
_________________________________________________________________
dense_19 (Dense)             (None, 10)                850
=================================================================
Total params: 61,706
Trainable params: 61,706
Non-trainable params: 0
_________________________________________________________________
```
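The `Param #` column follows two formulas. A convolution layer with k×k kernels, C_in input channels and C_out kernels has k·k·C_in·C_out weights plus C_out biases. A Dense layer has n_in·n_out weights plus n_out biases. Pooling and Flatten layers have no parameters. A short plain-Python check against the LeNet summary (the helper names are illustrative):

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in weights per kernel, plus one bias per kernel
    return k * k * c_in * c_out + c_out


def dense_params(n_in, n_out):
    # full weight matrix plus one bias per output unit
    return n_in * n_out + n_out


lenet_layers = {
    "conv2d_27": conv_params(5, 1, 6),     # 5x5 kernels on a 1-channel image
    "conv2d_28": conv_params(5, 6, 16),
    "conv2d_29": conv_params(5, 16, 120),
    "dense_18": dense_params(120, 84),
    "dense_19": dense_params(84, 10),
}

for name, count in lenet_layers.items():
    print(f"{name:10s} {count}")
print("total", sum(lenet_layers.values()))  # total 61706
```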

```
Epoch 1/5
750/750 [==============================] - 23s 29ms/step - loss: 1.1872 - accuracy: 0.6143 - val_loss: 0.3047 - val_accuracy: 0.9153
Epoch 2/5
750/750 [==============================] - 22s 29ms/step - loss: 0.2160 - accuracy: 0.9393 - val_loss: 0.1392 - val_accuracy: 0.9581
Epoch 3/5
750/750 [==============================] - 22s 29ms/step - loss: 0.1238 - accuracy: 0.9627 - val_loss: 0.0956 - val_accuracy: 0.9721
Epoch 4/5
750/750 [==============================] - 21s 28ms/step - loss: 0.0919 - accuracy: 0.9717 - val_loss: 0.0836 - val_accuracy: 0.9755
Epoch 5/5
750/750 [==============================] - 21s 28ms/step - loss: 0.0759 - accuracy: 0.9766 - val_loss: 0.0788 - val_accuracy: 0.9761
313/313 - 3s - loss: 0.0719 - accuracy: 0.9774
```
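The step counts in this log can be reproduced with a little arithmetic. The calculation assumes the standard MNIST split of 60,000 training and 10,000 test images. It also assumes `validation_split=0.2` and `batch_size=64` in `fit`; neither setting is visible in the truncated code, and they are simply values consistent with `750/750`. Finally, it uses Keras's default `batch_size=32` for `evaluate`.

```python
import math

TRAIN_IMAGES, TEST_IMAGES = 60_000, 10_000  # standard MNIST split
VALIDATION_SPLIT = 0.2  # assumption: not shown in the original code
FIT_BATCH = 64          # assumption: not shown in the original code
EVAL_BATCH = 32         # Keras default batch size for model.evaluate

train_used = round(TRAIN_IMAGES * (1 - VALIDATION_SPLIT))  # images actually trained on
steps_per_epoch = math.ceil(train_used / FIT_BATCH)         # the "750/750" counter
eval_steps = math.ceil(TEST_IMAGES / EVAL_BATCH)            # the "313/313" counter
print(train_used, steps_per_epoch, eval_steps)              # 48000 750 313
```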

```python
# Save the trained model parameters
```
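The saving code itself is truncated. One common way to store and reload trained parameters in Keras is `save_weights`/`load_weights`, sketched below. The sketch makes two assumptions. It uses a tiny hypothetical stand-in model in place of the trained LeNet. It also uses a hypothetical file name, `lenet.weights.h5`, placed in the temp directory; the `.weights.h5` suffix is required by Keras 3 and is treated as HDF5 by older tf.keras.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def build_model():
    # Hypothetical stand-in; in the article this would be LeNet(...)
    return tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(3, activation="relu"),
        tf.keras.layers.Dense(2),
    ])


weights_path = os.path.join(tempfile.gettempdir(), "lenet.weights.h5")

trained = build_model()            # pretend this model has been trained
trained.save_weights(weights_path)

restored = build_model()           # same architecture, fresh random weights
restored.load_weights(weights_path)
```

Only the weights are stored, so the architecture must be rebuilt with the same code before calling `load_weights`.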

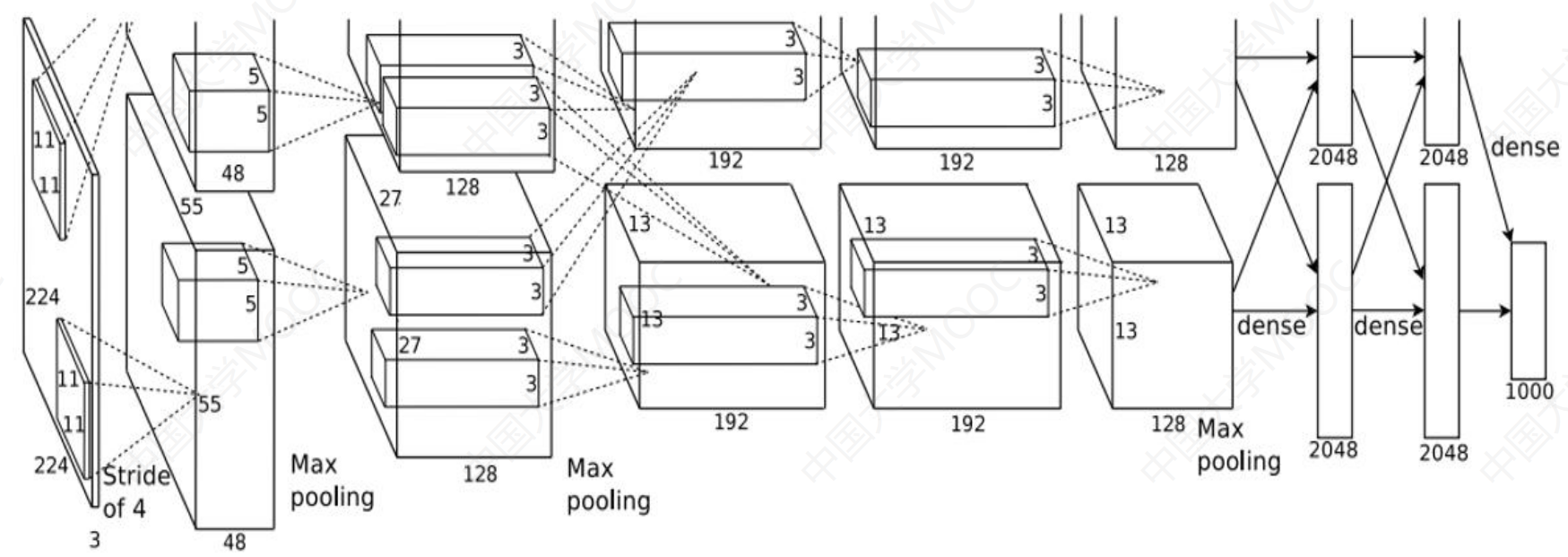

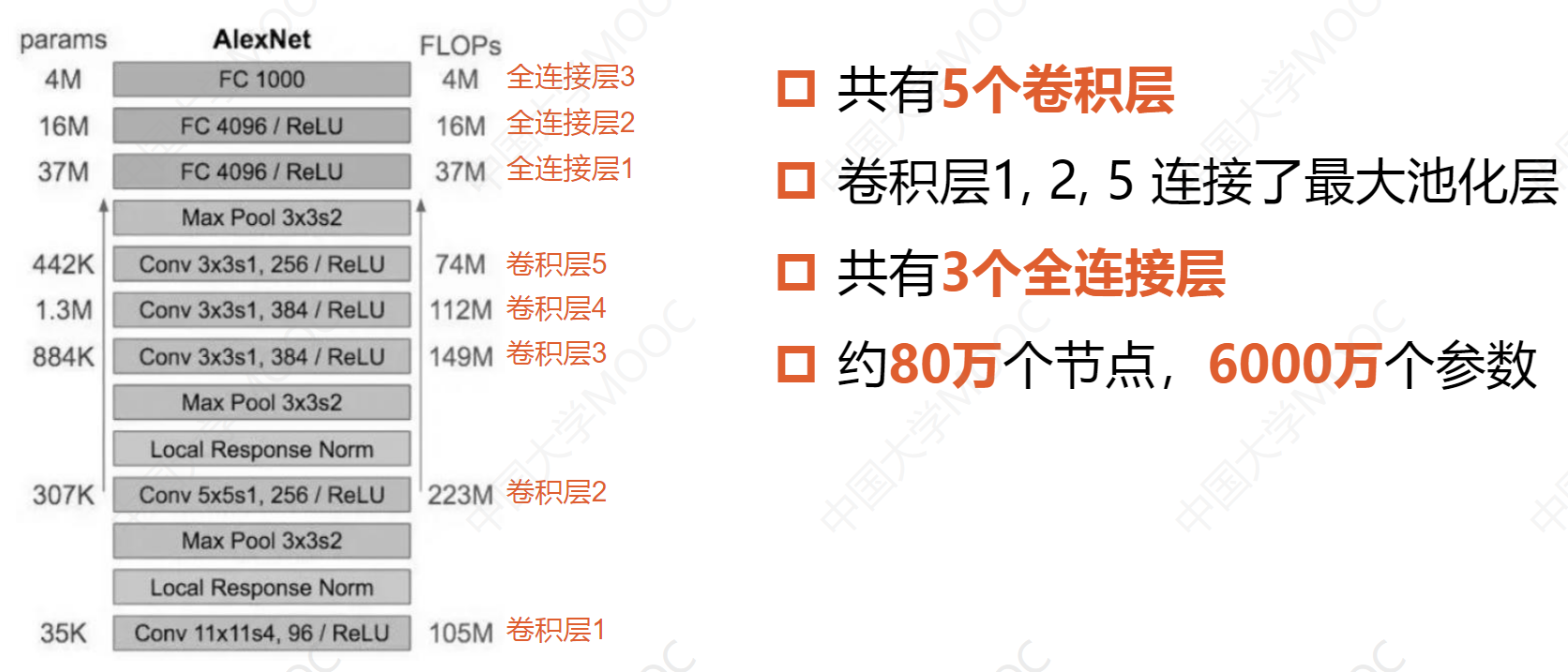

AlexNet Neural Network

AlexNet Network Structure

Key Innovations of AlexNet

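- Uses the ReLU activation function: training converges several times faster than with saturating activations such as tanh

- Uses Dropout during training (in the first two fully connected layers) to reduce overfitting

- Data augmentation, which enlarges the training set by a factor of 2048:

  - random 224×224 crops taken from the 256×256 original images

  - horizontal flips (mirror images)

- Uses overlapping max pooling (3×3 windows with stride 2), which makes the network slightly less prone to overfitting

- Uses GPUs to accelerate training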
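The factor of 2048 comes from crop positions combined with flipping. Counting positions as the AlexNet paper does, there are (256 − 224)² = 1024 offsets for a 224×224 crop. Each crop can also be mirrored, which gives 1024 × 2 = 2048. Below is a NumPy sketch of one random crop-and-flip step; the function name `random_crop_flip` is illustrative and does not come from the original.

```python
import numpy as np


def random_crop_flip(image, crop=224, rng=None):
    """Randomly crop a crop x crop patch from an (H, W, C) image and mirror it
    horizontally with probability 0.5 (AlexNet-style augmentation sketch)."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop + 1)    # upper bound is exclusive
    left = rng.integers(0, w - crop + 1)
    patch = image[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]             # flip along the width axis
    return patch


offsets = (256 - 224) ** 2                 # crop positions as counted in the paper
print(offsets * 2)                         # 2048 (x2 for the horizontal flip)

img = np.arange(256 * 256 * 3).reshape(256, 256, 3)
print(random_crop_flip(img, rng=np.random.default_rng(0)).shape)  # (224, 224, 3)
```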

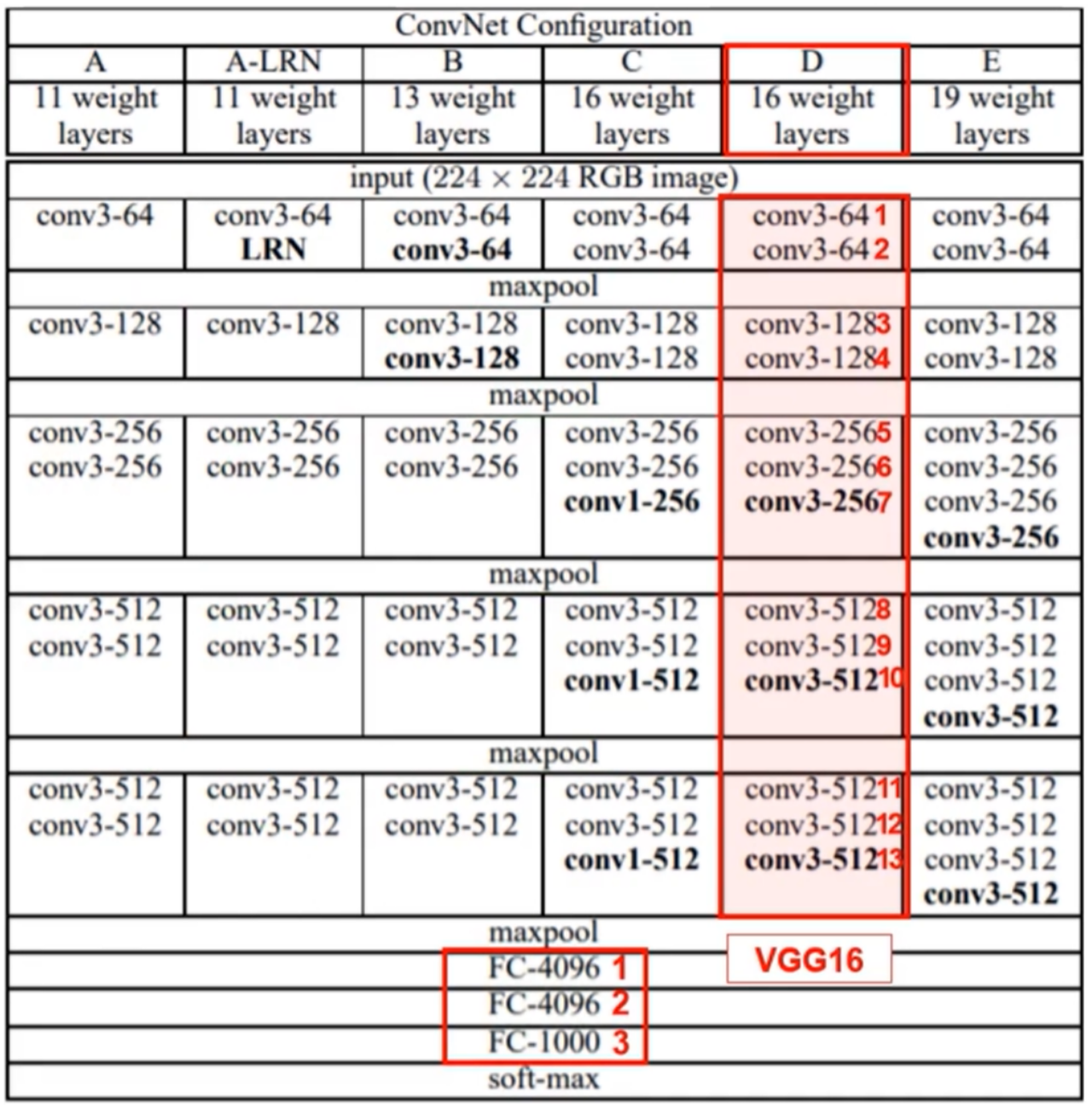

VGGNet Neural Network

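- Cascades several small (3×3) convolution kernels to build a deeper network

- Reduces the number of parameters and the amount of computation compared with a single large kernel covering the same receptive field

- Improves the network's generalization and representational power, since every extra layer adds another nonlinearity

- Trains the simple configuration A first, then reuses A's weights to initialize the deeper, more complex configurations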
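The saving from cascading small kernels can be checked with arithmetic. Two stacked 3×3 layers see a 5×5 region, and three see a 7×7 region. With C channels in and out (biases ignored), they need 2·9·C² and 3·9·C² weights instead of 25·C² and 49·C², and proportionally fewer multiply-adds per output position. A plain-Python comparison, with C = 256 chosen only as an example:

```python
def stacked_receptive_field(k, n):
    # each extra stride-1 k x k layer grows the receptive field by k - 1
    return 1 + n * (k - 1)


def weights(k, c, n=1):
    # n stacked k x k conv layers, c channels in and out, biases ignored
    return n * k * k * c * c


C = 256  # example channel count
results = {}
for n, big in [(2, 5), (3, 7)]:
    rf = stacked_receptive_field(3, n)
    small, large = weights(3, C, n), weights(big, C)
    results[n] = (rf, small, large)
    print(f"{n} x 3x3: field {rf}x{rf}, {small:,} weights vs one {big}x{big}: {large:,}")
```

For C = 256 this gives 1,179,648 vs 1,638,400 weights for the 5×5 case and 1,769,472 vs 3,211,264 for the 7×7 case.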

```python
# Build the VGG16 model
```

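The output is as follows. The widths printed in it (244, 122, 61, 30, 15) show that this run built the model with an input width of 244, evidently a typo for 224. The parameter counts are unaffected: a convolution's parameters do not depend on the spatial size of its input, and the last pooling layer happens to produce 7×7 in both cases.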

```
Model: "sequential_14"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_56 (Conv2D)           (None, 224, 244, 64)      1792
_________________________________________________________________
conv2d_57 (Conv2D)           (None, 224, 244, 64)      36928
_________________________________________________________________
max_pooling2d_32 (MaxPooling (None, 112, 122, 64)      0
_________________________________________________________________
conv2d_58 (Conv2D)           (None, 112, 122, 128)     73856
_________________________________________________________________
conv2d_59 (Conv2D)           (None, 112, 122, 128)     147584
_________________________________________________________________
max_pooling2d_33 (MaxPooling (None, 56, 61, 128)       0
_________________________________________________________________
conv2d_60 (Conv2D)           (None, 56, 61, 256)       295168
_________________________________________________________________
conv2d_61 (Conv2D)           (None, 56, 61, 256)       590080
_________________________________________________________________
conv2d_62 (Conv2D)           (None, 56, 61, 256)       590080
_________________________________________________________________
max_pooling2d_34 (MaxPooling (None, 28, 30, 256)       0
_________________________________________________________________
conv2d_63 (Conv2D)           (None, 28, 30, 512)       1180160
_________________________________________________________________
conv2d_64 (Conv2D)           (None, 28, 30, 512)       2359808
_________________________________________________________________
conv2d_65 (Conv2D)           (None, 28, 30, 512)       2359808
_________________________________________________________________
max_pooling2d_35 (MaxPooling (None, 14, 15, 512)       0
_________________________________________________________________
conv2d_66 (Conv2D)           (None, 14, 15, 512)       2359808
_________________________________________________________________
conv2d_67 (Conv2D)           (None, 14, 15, 512)       2359808
_________________________________________________________________
conv2d_68 (Conv2D)           (None, 14, 15, 512)       2359808
_________________________________________________________________
max_pooling2d_36 (MaxPooling (None, 7, 7, 512)         0
_________________________________________________________________
flatten_14 (Flatten)         (None, 25088)             0
_________________________________________________________________
dense_30 (Dense)             (None, 4096)              102764544
_________________________________________________________________
dropout_4 (Dropout)          (None, 4096)              0
_________________________________________________________________
dense_31 (Dense)             (None, 4096)              16781312
_________________________________________________________________
dropout_5 (Dropout)          (None, 4096)              0
_________________________________________________________________
dense_32 (Dense)             (None, 1000)              4097000
=================================================================
Total params: 138,357,544
Trainable params: 138,357,544
Non-trainable params: 0
_________________________________________________________________
```
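The VGG16 parameter total can be reproduced from the layer configuration alone. The plain-Python sketch below (helper names are illustrative) walks VGG16's five blocks of 3×3 convolutions and its three fully connected layers. It tracks the feature-map size through each 2×2 pooling. Running it for both a 224×224 and a 224×244 input gives the same 7×7×512 final map and the same 138,357,544 parameters.

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in weights plus one bias, for each of the c_out kernels
    return k * k * c_in * c_out + c_out


def dense_params(n_in, n_out):
    return n_in * n_out + n_out


# output channels of the 3x3 conv layers in each of VGG16's five blocks
VGG16_BLOCKS = [[64, 64], [128, 128], [256, 256, 256],
                [512, 512, 512], [512, 512, 512]]


def vgg16_stats(height, width, channels=3, num_classes=1000):
    """Return the final feature-map shape and the total parameter count."""
    total, c_in, h, w = 0, channels, height, width
    for block in VGG16_BLOCKS:
        for c_out in block:
            total += conv_params(3, c_in, c_out)  # 'same' padding keeps h, w
            c_in = c_out
        h, w = h // 2, w // 2                     # 2x2 max pooling, stride 2
    flat = h * w * c_in
    for n_in, n_out in [(flat, 4096), (4096, 4096), (4096, num_classes)]:
        total += dense_params(n_in, n_out)        # Dropout adds no parameters
    return (h, w, c_in), total


print(vgg16_stats(224, 224))  # ((7, 7, 512), 138357544)
print(vgg16_stats(224, 244))  # ((7, 7, 512), 138357544)
```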

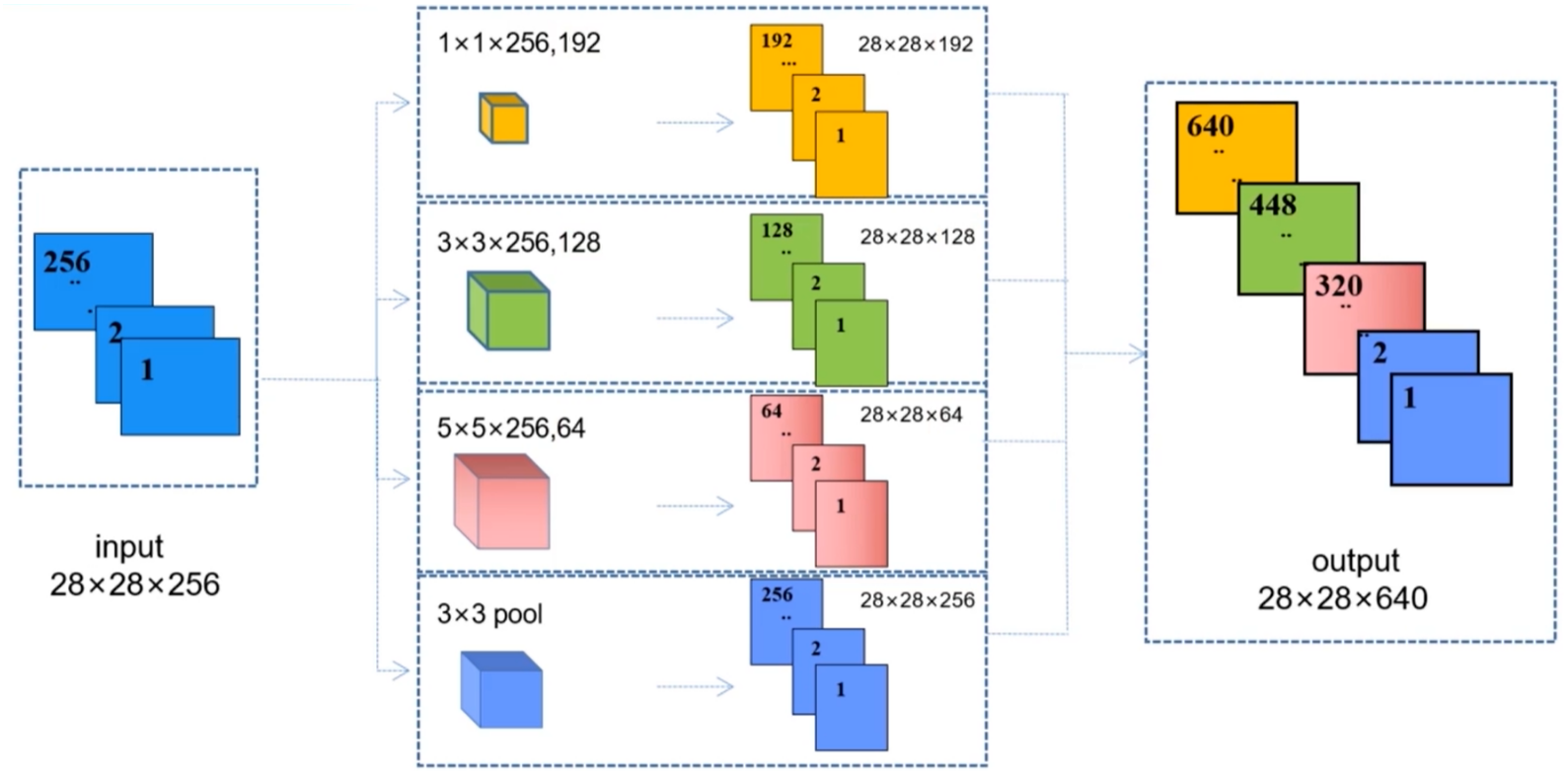

GoogLeNet Neural Network

1. The First-Generation Inception Module

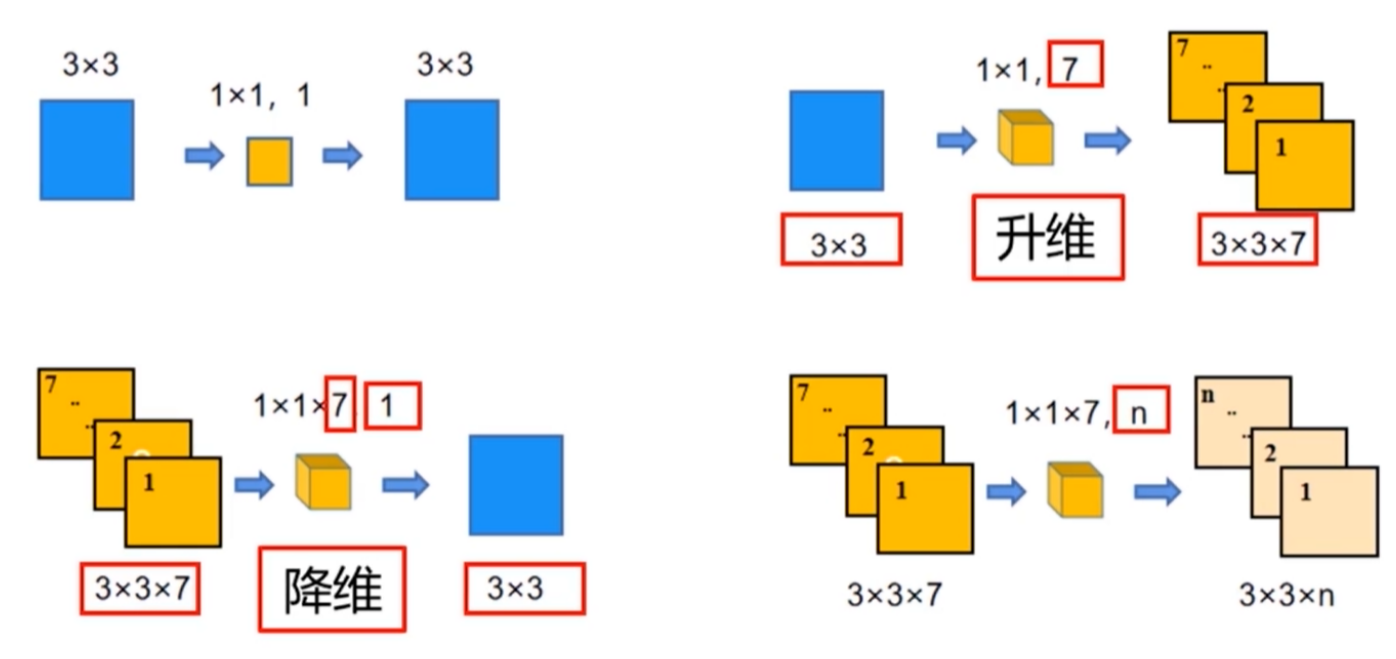
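In the first-generation ("naive") Inception module, the same input is fed to parallel 1×1, 3×3 and 5×5 convolutions and a 3×3 max pooling, and the branch outputs are concatenated along the channel axis. Below is a Keras sketch. The filter counts 64/128/32 and the 28×28×192 input are borrowed from GoogLeNet's inception (3a) stage purely for illustration, and the function name `naive_inception` is hypothetical. The pooling branch passes all 192 input channels through, so the output depth grows to 64 + 128 + 32 + 192 = 416. This growth, together with the cost of large kernels on many channels, is what the 1×1 convolutions discussed next are used to control.

```python
import tensorflow as tf
from tensorflow.keras import layers


def naive_inception(x, f1, f3, f5):
    """Naive Inception module: parallel branches concatenated on channels."""
    b1 = layers.Conv2D(f1, 1, padding="same", activation="relu")(x)
    b3 = layers.Conv2D(f3, 3, padding="same", activation="relu")(x)
    b5 = layers.Conv2D(f5, 5, padding="same", activation="relu")(x)
    # stride-1 'same' pooling keeps 28x28 so all branches can be concatenated
    bp = layers.MaxPooling2D(pool_size=3, strides=1, padding="same")(x)
    return layers.Concatenate(axis=-1)([b1, b3, b5, bp])


inputs = tf.keras.Input(shape=(28, 28, 192))
outputs = naive_inception(inputs, f1=64, f3=128, f5=32)
model = tf.keras.Model(inputs, outputs)
print(outputs.shape)
```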

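2. The 1×1 Convolution Kernel

A 1×1 convolution can reduce or increase the number of channels (dimensionality reduction/expansion), and it lets information flow across channels.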
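To see dimensionality reduction in numbers, take the 5×5 branch of GoogLeNet's inception (3a) stage. It maps a 28×28×192 input to 32 output channels, and the dimension-reduced version first applies a 16-channel 1×1 "reduce" layer. Plain-Python counts of weights and multiplications, with biases ignored (the helper `conv_cost` is illustrative):

```python
H = W = 28                       # spatial size of the feature map
C_IN, C_RED, C_OUT = 192, 16, 32


def conv_cost(k, c_in, c_out, h=H, w=W):
    """Weights and multiplications for a stride-1 'same' k x k convolution."""
    n_weights = k * k * c_in * c_out
    return n_weights, n_weights * h * w   # every weight is used once per pixel


direct = conv_cost(5, C_IN, C_OUT)
reduce_1x1 = conv_cost(1, C_IN, C_RED)
then_5x5 = conv_cost(5, C_RED, C_OUT)
bottleneck = tuple(a + b for a, b in zip(reduce_1x1, then_5x5))

print("direct 5x5:   weights=%d, mults=%d" % direct)      # 153600, 120422400
print("1x1 then 5x5: weights=%d, mults=%d" % bottleneck)  # 15872, 12443648
```

The 1×1 layer cuts both numbers by roughly a factor of 10 while keeping the same 32-channel output.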

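My own understanding of "1×1, 7" versus "1×1×7, 1": the former means seven 1×1 kernels. Each of them is convolved with the input and outputs one feature map, so seven feature maps are produced in total. (When the input has several channels, each of these kernels actually has the same depth as the input, as in the second case.) The latter means a single kernel of size 1×1×7. It can be thought of as having seven channels, each holding a small 1×1 kernel. These seven small kernels together form one larger kernel along the depth dimension, so there is still only one kernel. Each small kernel is convolved with the corresponding one of the seven channels of the previous layer, and the results are added together, so the final output is still just one feature map.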
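This understanding can be checked numerically. A 1×1 convolution is a weighted sum over channels at every pixel, so it can be written as a matrix product on the channel axis. In the NumPy sketch below, the helper `conv1x1` and the random data are illustrative. One 1×1×7 kernel turns a 7-channel input into a single feature map, seven such kernels give seven maps, and two kernels reduce 7 channels to 2.

```python
import numpy as np


def conv1x1(x, w, b=None):
    """1x1 convolution. x: (H, W, C_in), w: (C_in, C_out) -> (H, W, C_out).

    At every pixel, each output map is a weighted sum of the C_in input
    channels at that same pixel.
    """
    out = np.einsum("hwc,co->hwo", x, w)
    return out if b is None else out + b


rng = np.random.default_rng(0)
x = rng.standard_normal((5, 5, 7))           # 5x5 feature map with 7 channels

one_kernel = rng.standard_normal((7, 1))     # a single 1x1x7 kernel
seven_kernels = rng.standard_normal((7, 7))  # seven 1x1x7 kernels
two_kernels = rng.standard_normal((7, 2))    # reduce 7 channels to 2

print(conv1x1(x, one_kernel).shape)     # (5, 5, 1)
print(conv1x1(x, seven_kernels).shape)  # (5, 5, 7)
print(conv1x1(x, two_kernels).shape)    # (5, 5, 2)

# The single kernel's output equals "multiply each channel by its own 1x1
# weight, then add the 7 results", as described above.
manual = sum(one_kernel[c, 0] * x[:, :, c] for c in range(7))
print(np.allclose(conv1x1(x, one_kernel)[:, :, 0], manual))  # True
```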